Black Art Revisited: Sitecore DataProvider/Import Hybrid with MongoDB

tl;dr -- Combine data import and DataProvider approaches for great justice. Find the source on GitHub.

My Black Art of Sitecore DataProviders article still gets a lot of hits, which to some degree makes sense -- creating a DataProvider hasn't really changed since Sitecore 6.0 (though I very much need to explore pipeline-based item providers in Sitecore 8). But if I've learned anything myself since then, it's that there are actually very limited circumstances in which you want to implement a DataProvider. Why's that?

- They're difficult to implement

- It's difficult to invalidate Sitecore caches when data is updated

- It's difficult to trigger search index updates when data is updated

- You can't enrich the content or metadata in Sitecore (e.g. Analytics attributes)

- You are dependent at runtime on the source system

- They're even more difficult to implement in a way that performs well

So the fallback is usually an import via the Item API. But I've never really seen anyone happy with this either, especially for large imports. Why's that?

- Writing data to Sitecore is SLOW. Even with a BulkUpdateContext.

- Publishing can be very slow as well, though Sitecore 7.2 improved this significantly.

- Usually you end up using a BulkUpdateContext, which means needing to trigger a search index rebuild, and potentially a links database rebuild, after every import.

- Scheduled updates mean that it can be hours and hours before a data change in the source system is reflected in Sitecore.

Is there a way to integrate data with Sitecore that balances the immediacy of a data provider with the simplicity and enrichment ability of an import? Maybe! I'm presenting here a POC that combines the two approaches to try to achieve just that. We do this by introducing an intermediary data store that you may already have in your Sitecore 7.5 or 8 environment -- MongoDB.

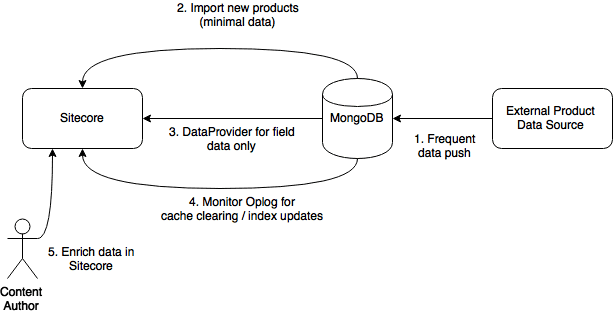

The basic process:

- Data is pushed frequently from an external system into MongoDB. Writing data to MongoDB should be quick and easy, so it can be done very often, in theory. Maybe the data is already in MongoDB, in which case you are set.

- New items (products in this case) are imported frequently into Sitecore. This can be done quickly and more often, in theory, because we are importing minimal data -- just creating the item, and populating a field with an external ID.

- We use a DataProvider with a simple implementation of GetItemFields to provide field data for the item directly from MongoDB, looked up by the external ID stored on the item.

- To ensure caches are cleared and indexes are updated when data changes, we monitor the MongoDB oplog, a capped collection that MongoDB maintains to synchronize data between the members of a replica set.

- Content editors can enrich data on the item as needed. Externally managed fields can be denied Field Write to prevent futile edits.
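To make the moving parts concrete, here is a minimal in-memory sketch of the process above. It is not the POC code (that is C# against the Sitecore API, on GitHub) and it talks to neither Sitecore nor MongoDB: a plain dict stands in for the MongoDB collection, and `Item`, `HybridFieldProvider`, `import_item`, `on_change` and the simplified change-entry shape `{"id": ...}` are all hypothetical names made up for illustration. I've also assumed that externally managed fields win when a field name exists in both places.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    """Stand-in for an imported Sitecore item: it stores only an external ID
    plus whatever fields editors add locally."""
    item_id: str
    external_id: str
    local_fields: dict = field(default_factory=dict)


class HybridFieldProvider:
    """Hypothetical model of the hybrid: a thin import, field lookups against
    the external store, and cache invalidation driven by change entries."""

    def __init__(self, store):
        self.store = store              # dict standing in for a MongoDB collection
        self.items_by_external_id = {}  # what the minimal import has created so far
        self.cache = {}                 # stand-in for Sitecore's item caches
        self.reindex_queue = []         # item IDs that need a search index update

    def import_item(self, item_id, external_id):
        # The import only creates the item and records its external ID.
        item = Item(item_id, external_id)
        self.items_by_external_id[external_id] = item
        return item

    def get_item_fields(self, item):
        # Plays the role of GetItemFields: merge local fields with external
        # data (assumption: external values win on a name clash), then cache.
        if item.item_id not in self.cache:
            external = self.store.get(item.external_id, {})
            self.cache[item.item_id] = {**item.local_fields, **external}
        return self.cache[item.item_id]

    def on_change(self, entry):
        # Called for each change seen while tailing the oplog. The entry shape
        # here is simplified and is NOT MongoDB's real oplog document format.
        item = self.items_by_external_id.get(entry["id"])
        if item is None:
            return False  # not imported yet; the next import run picks it up
        self.cache.pop(item.item_id, None)
        self.reindex_queue.append(item.item_id)
        return True
```

Usage would look something like this: import `sku-1` as `item-a`, read its fields once (now cached), change the price in the store, and the stale price keeps being served until `on_change({"id": "sku-1"})` evicts the cache entry and queues `item-a` for reindexing. That gap between a write to the store and the invalidation is exactly what the oplog monitoring is there to close.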

So, does this work? Glad you asked. I put together a POC and recorded a walkthrough, which you can find below. In the video, I go into more detail on import vs data provider, and some of the potential gotchas of the hybrid approach.

Again, this is all theoretical. It has not been attempted in a production implementation. But I do think there is potential here, especially given that MongoDB is going to be found in more and more Sitecore environments going forward. Feedback is welcome, as are pull requests. :)

Full source code can be found on GitHub.